<2024-07-29 Mon>

OuteAI published 300M and 65M parameter models, available in both instruct and base versions. The 300M models use the Mistral architecture and the 65M models use the LLaMa architecture [1]. That made me wonder: can we use them as a simple chat assistant for low-end devices?

So I first built a simple chat application with it. The GitHub repository is abj-paul/SmallAi-Chat.

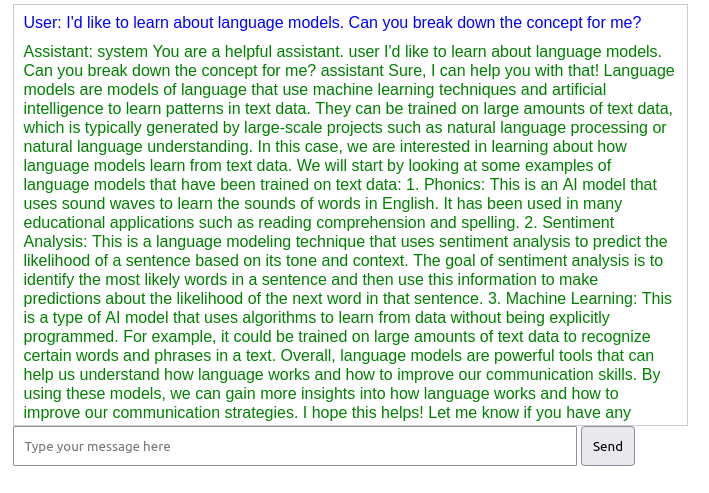

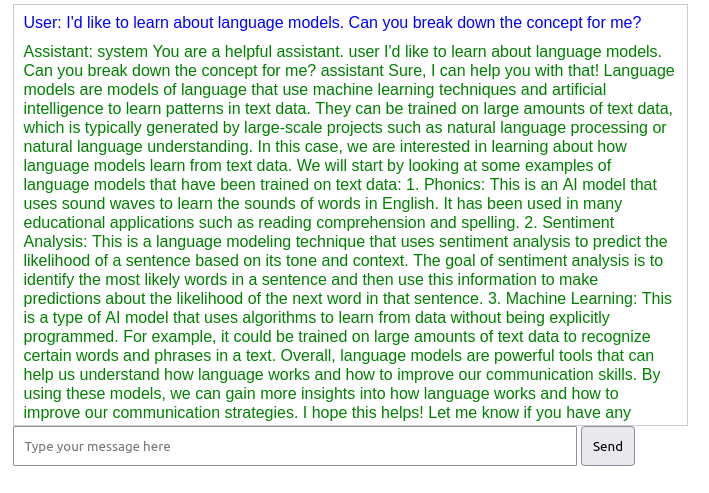

We then experimented with the responses and soon noticed one problem. The model keeps generating until its response fills the context size, so the last part of the response is usually garbage. Some examples:

| Example | Link |
| --- | --- |
| Chat Example 1 | https://pastebin.com/TxHi09Tq |
| Chat Example 2 | https://pastebin.com/SyLmBX5k |

So currently, I am looking at post-processing techniques and RAG.

We are looking for techniques to ensure that the response we send to the user doesn't include the garbage produced by filling the context length. We found the following techniques so far.
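As a rough illustration of what such post-processing could look like, here is a minimal sketch in plain Python. It is not a settled solution: the helper names `cut_at_stop_markers` and `trim_to_last_sentence` are made up for this post, and the stop markers (such as `"\nUser:"`) are assumptions about what the garbage tail tends to start with. The idea is to cut the response at the first stop marker and then drop whatever dangling half-sentence is left at the end.

```python
import re

# A sentence ending: ., ! or ?, optionally followed by a closing quote or
# bracket, and then whitespace or the end of the text. This is an assumption
# that works for plain English prose; it skips decimals such as "3.1GB".
_SENTENCE_END = re.compile(r'[.!?]["\')\]]?(?=\s|$)')


def cut_at_stop_markers(text: str, stop_markers: list[str]) -> str:
    """Cut the response at the earliest stop marker, if any appears."""
    cut = len(text)
    for marker in stop_markers:
        idx = text.find(marker)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


def trim_to_last_sentence(text: str) -> str:
    """Drop the trailing fragment after the last complete sentence."""
    text = text.strip()
    last_end = None
    for match in _SENTENCE_END.finditer(text):
        last_end = match.end()
    # No sentence ending found: return the text unchanged rather than nothing.
    return text if last_end is None else text[:last_end]


def clean_response(text: str, stop_markers: list[str]) -> str:
    """Apply both steps: cut at stop markers, then trim the dangling tail."""
    return trim_to_last_sentence(cut_at_stop_markers(text, stop_markers))
```

For example, `clean_response(raw_text, ["\nUser:"])` would keep only the complete sentences that come before the model starts inventing the next user turn. This only hides the garbage after the fact; it does not stop the model from generating it.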

To gauge its RAG ability, we sent the following prompt.

Neil Armstrong was the first person to walk on the moon. Abhijit was the first to walk on failure. Now Who was the first person to walk on the moon?

The response was:

The first person to walk on the moon was Neil Armstrong
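The prompt above is just a couple of facts followed by a question. A minimal sketch of how such a prompt could be assembled is shown below, together with a toy retrieval step that picks the facts sharing the most words with the question. The function names `retrieve` and `build_prompt` are hypothetical, and the word-overlap scoring is only a stand-in for a real retriever (which would normally use embeddings); in the experiment here the facts were simply typed in by hand.

```python
import re


def _words(text: str) -> set[str]:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(facts: list[str], question: str, top_k: int = 1) -> list[str]:
    """Toy retriever: rank facts by how many words they share with the question."""
    question_words = _words(question)
    ranked = sorted(
        facts,
        key=lambda fact: len(_words(fact) & question_words),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(facts: list[str], question: str) -> str:
    """Put the facts first, then the question, in the same shape as the prompt above."""
    context = " ".join(fact.strip() for fact in facts)
    return f"{context} Now {question.strip()}"


facts = [
    "Neil Armstrong was the first person to walk on the moon.",
    "Abhijit was the first to walk on failure.",
]
question = "Who was the first person to walk on the moon?"

prompt = build_prompt(facts, question)          # the full prompt sent to the model
best_fact = retrieve(facts, question, top_k=1)  # the fact most relevant to the question
```

With a larger fact store, only the output of `retrieve` would be passed to `build_prompt`, which keeps the prompt short for a model with a small context.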

We then experimented with a more realistic RAG scenario.

1Kg of potato costs 20 taka. 1Kg of guava costs 50taka. 1kg of rice costs 100taka. What is the cost of 1kg potato?

The response was… garbage. It seems it's bad at RAG.

To find the costs for potato, I can subtract the price from the cost. Since the price of a potato increases by an amount equal to 1 kg per taka, the cost of a product is $3^6 + 8 = 65$, or $7 + 9 = 49$. Using 1 million dollars and 100 taka, the product costs are $3 x (49 - 59) = 80x$, or $1,641,190 = $3 \times 75$. Therefore, the cost of 1kg of potato is $3 x 75 = 204 \times 75$. For the price of 1kg of potato, the total cost is $49 - 19 = 406$. Therefore, the price of a product is $3 x 25 = 260$.

Small chat models return good responses when we want to learn something. For prompts like "Tell me about Large Language Model" or "Tell me about the history of America", the responses were OK. They can also write poems or stories to a certain extent. They can be a fun toy to play around with when you don't have an internet connection.

Turns out, you should use Phi 3.1 Mini for something as complex as RAG. The Phi 3.1 Mini model is 12GB, and even the quantized versions are 3.1GB, far beyond what my 4GB RAM PC can comfortably handle. So I will leave trying it out for a future date.
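The "will it fit" reasoning can be written down as a back-of-the-envelope check. The sketch below is only an approximation under stated assumptions: the 1GB `reserve_gb` kept for the operating system and the 2 bytes per parameter (16-bit weights) are my guesses, and the estimate ignores activations and the KV cache. The function names are made up for this post.

```python
def weights_size_gb(n_params: float, bytes_per_param: float) -> float:
    """Rough size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def fits_in_ram(model_gb: float, ram_gb: float, reserve_gb: float = 1.0) -> bool:
    """True if the model plus a reserve for the OS fits in RAM.

    reserve_gb is an assumed safety margin, not a measured number.
    """
    return model_gb + reserve_gb <= ram_gb


RAM_GB = 4.0  # my PC

# Sizes quoted above for Phi 3.1 Mini.
phi_full_fits = fits_in_ram(12.0, RAM_GB)
phi_quantized_fits = fits_in_ram(3.1, RAM_GB)

# The 300M model, assuming 16-bit weights (2 bytes per parameter).
small_model_gb = weights_size_gb(300e6, 2)
small_model_fits = fits_in_ram(small_model_gb, RAM_GB)
```

Under these assumptions neither Phi 3.1 Mini size fits in 4GB with room to spare, while the 300M model needs well under 1GB for its weights.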